Updates from VisionLib – Release 2.1 Available

With Release 2.1 we introduce Texture Color Model Tracking, which uses the texture information of tracked objects to enrich tracking with color edges extracted from the model’s textures. The synchronization of tracking and displayed content when using ARFoundation together with VisionLib has also improved, as has the performance of Multi Model Tracking. Read on to learn about all updates.

You can download the new release at visionlib.com/downloads. Need help installing VisionLib? Here is an overview of all VisionLib packages and how to install VisionLib for Unity through UPM.

Texture Color Model Tracking:

Improve the Line Model with Edges extracted from Textures

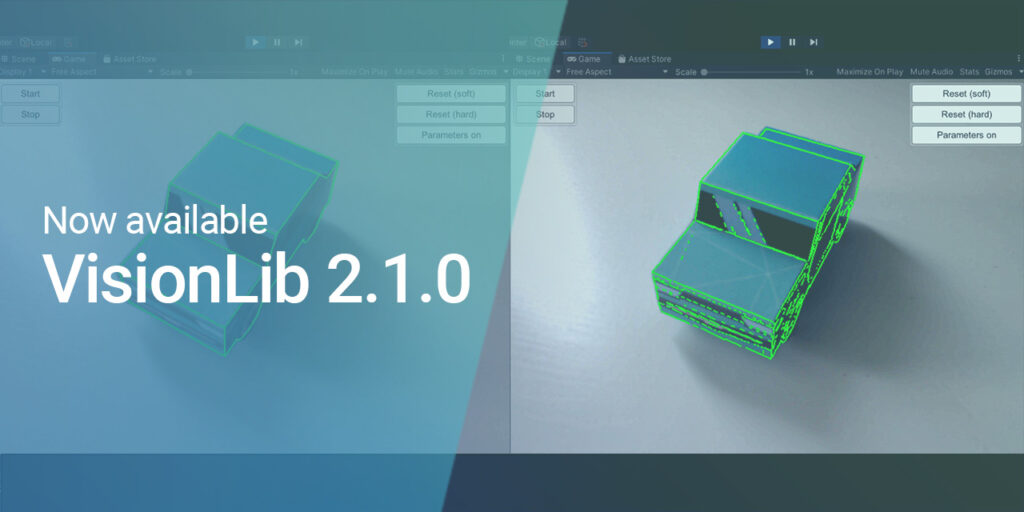

With Release 2.1 we introduce a new feature called Texture Color Model Tracking. It enables using texture information of tracked objects to enrich tracking with color edges extracted from their texture.

Usually, model tracking generates a line model from geometric information only. With this feature active, VisionLib also derives line model information from a model target’s texture.

Using textures to enhance the line model is particularly useful when the geometry of a tracking reference’s 3D model does not yield good contour information. The feature thus gives you another option for improving the line model and, in turn, the tracking results.

We’ve added a new demo scene to the example package, so you can try the new feature using the paper-craft car (our demo tracking object) and play around with its options. The textureColorSensitivity parameter controls the number of edges extracted from the texture. Note that the new feature is disabled by default.
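As a rough illustration of setting this parameter, the sketch below patches a tracking configuration held as a Python dictionary. It assumes the usual JSON layout of a tracking configuration file with a tracker.parameters object. That layout, the model file name, and the value 0.5 are assumptions made for illustration and are not taken from this release, so check the parameter reference for the actual placement and valid range.

```python
import json


def with_parameter(config, name, value):
    """Return a copy of a tracking config with one tracker parameter set."""
    # Deep copy via a JSON round trip so the original config stays untouched.
    patched = json.loads(json.dumps(config))
    patched.setdefault("tracker", {}).setdefault("parameters", {})[name] = value
    return patched


# Hypothetical minimal configuration; file name and layout are assumptions.
base_config = {
    "type": "VisionLibTrackerConfig",
    "version": 1,
    "tracker": {
        "type": "modelTracker",
        "version": 1,
        "parameters": {"modelURI": "project-dir:car.obj", "metric": "m"},
    },
}

# 0.5 is an illustrative value only, not a recommended setting.
textured_config = with_parameter(base_config, "textureColorSensitivity", 0.5)
print(json.dumps(textured_config, indent=2))
```

Returning a patched copy keeps the base configuration reusable, which makes it easy to compare tracking with and without texture edges.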


More News & Updates

Besides the new feature, which uses a model’s texture to enrich tracking information, we would also like to point out these changes and improvements:

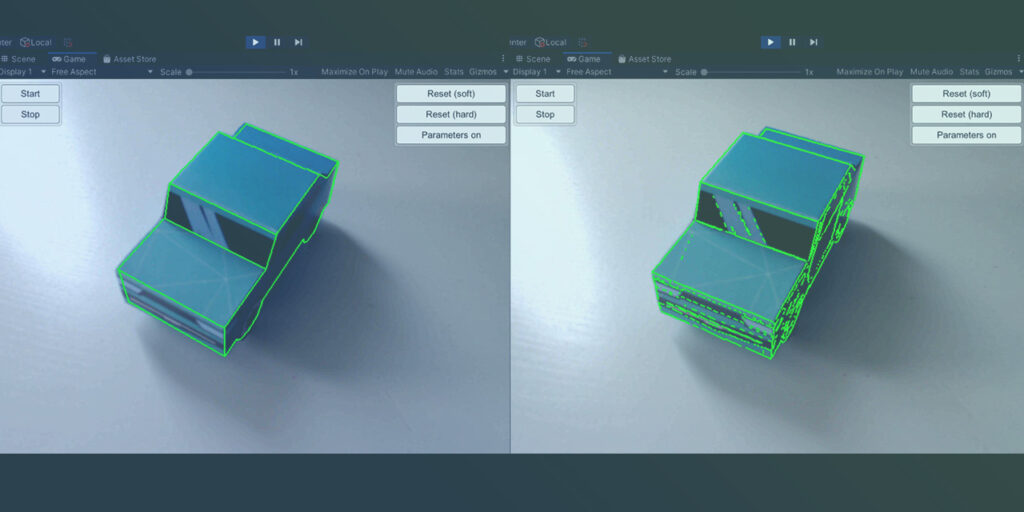

- The synchronization of tracking and displayed content has improved when using ARFoundation together with VisionLib. Augmentations now appear much smoother during tracking.


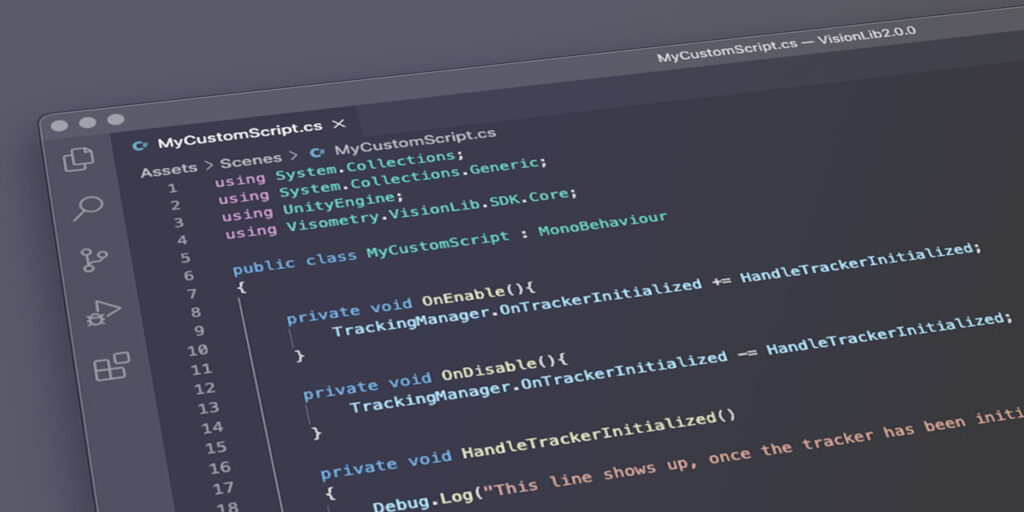

- When using Multi Model Tracking in Unity, you can now set an init pose via the transform of a tracking anchor by using the public SetInitPose or SetInitPoseAsync method of the TrackingAnchor script component. This makes it easier to use init poses in Multi Model Tracking setups.

- Also, by eliminating unnecessary image copies, the performance of Multi Model Tracking has improved significantly.

- With the new parameter enableEdgeFilter, we give you more control when tracking objects without repeating patterns or repetitive structures. When enabled, the filter keeps tracking from getting stuck on such elements. You can now disable this processing-intensive task to reduce memory consumption and speed up tracking whenever your tracked objects don’t have such structures. If you’re not certain, leave this parameter untouched. It is enabled by default.
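The guidance on enableEdgeFilter can be written down as a small decision rule. The sketch below is only an illustration: the helper name, the parameters dictionary, and the model file name are hypothetical, and it assumes the parameter is passed as a plain key/value pair alongside the other tracking parameters.

```python
from typing import Optional


def edge_filter_override(has_repeating_patterns: Optional[bool]) -> dict:
    """Return parameter overrides for enableEdgeFilter (hypothetical helper).

    None means "not sure": no override is returned, so the default
    (enabled) stays in effect.
    """
    if has_repeating_patterns is False:
        # Only switch the filter off when the object is known to have
        # no repeating patterns or repetitive structures.
        return {"enableEdgeFilter": False}
    return {}


# Hypothetical parameter set; the model file name is an assumption.
parameters = {"modelURI": "project-dir:machine.obj"}
parameters.update(edge_filter_override(False))
print(parameters)
```

Leaving the key out entirely when unsure, rather than writing the default explicitly, keeps the configuration aligned with whatever default the installed release uses.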

Read the full change log to get all the details.

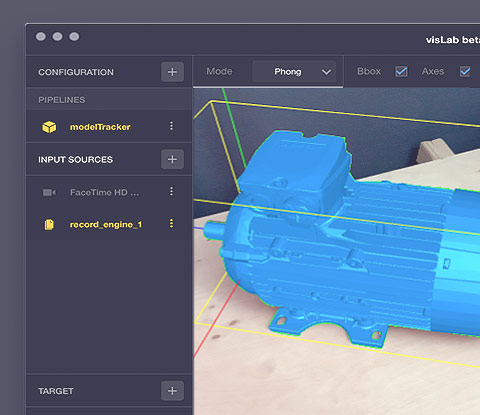

With every new VisionLib release, there is an update to VisLab. Get it now.

Learn VisionLib – Workflow, FAQ & Support

We help you apply Augmented and Mixed Reality in your project, on your platform, or within enterprise solutions. Our online documentation and Video Tutorials grow continuously and deliver comprehensive insights into VisionLib development.

Check out these articles: Whether you’re a pro or a starter, our new documentation articles on understanding tracking and debugging options will help you get a clearer view of VisionLib and Model Tracking.

For troubleshooting, look up the FAQ or write us a message at .