What's new in Release 20.1.1

VisionLib Release 20.1.1 is now available at visionlib.com.

The new year starts with a major VisionLib Release: it comes with optimization updates to Auto-Init and a full revision of VisionLib’s Android support, which brings ARCore Fusion. And we’ve added a true highlight feature: Multi Model Tracking!

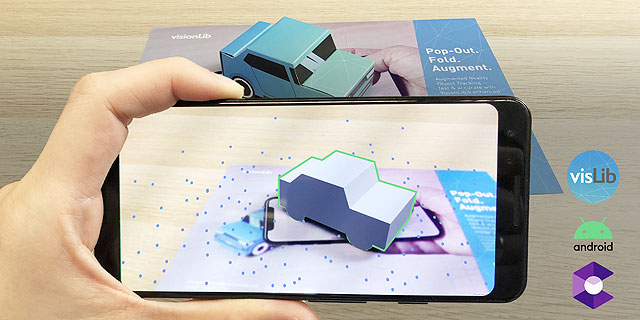

Introducing: Simultaneous multi-object tracking with ›Multi Model Tracking‹

At AWE EU 2019 we gave an exclusive sneak peek at Multi Model Tracking. Now it’s included in the new release, and you can get access to its core functionality as a beta. It enables you to track several objects separately at the same time: once the objects are detected, VisionLib keeps tracking each of them, even when you move them freely in different directions.

Imagine you want to track separate parts of the same machine, or moving parts of a vehicle’s interior. Now you can distinguish components (such as the steering wheel and the cockpit) and track them separately.

This adds a whole new quality to enterprise use cases, and to Augmented Reality object tracking as a whole.

Have a look at our example scene and test it with the new do-it-yourself papercraft test targets. For more information on how to implement this feature, refer to the multi model documentation.

Vastly Renewed: Android & ARCore Support

If you haven’t used VisionLib on Android before, now is a good time to reconsider. It is nothing less than a whole new integration. VisionLib now handles Android’s native calibration data, supports ARCore, and combines VisionLib’s Model Tracking with the platform’s core AR functionality far better: this lets you use object tracking from VisionLib and SLAM tracking from the platform at the same time.

Tech Demos & Featured Customer Cases on YouTube

We created short “tech demo” videos that show all the new features in action. Check out our YouTube playlist; it is definitely worth a look. We are also excited about what people have built with VisionLib so far, so we created a channel of featured ›Made with VisionLib // Customer Demos & Stories‹. Interested in having your work linked there, too? We’d be honored to do so. Drop us a note, and have your project or demo shared.

Improvements & Revisions: Speed & Functionality

We are also working on improvements that make VisionLib more stable and ready for future developments. With Multi Model Tracking available in this release, we’re heading for even more machine-vision-based capabilities and services. There is much to come to XR. Join us on the journey and stay tuned.

Learn VisionLib – Workflow, FAQ & Support

Augmented Reality has become a central part of today’s technology portfolio. But when it comes to complex AR cases, working with computer vision tracking can become demanding. That is why we have created several resources to get you started and to help you when things get complex.

Check our new Using VisionLib pages for an overview of how to get started with development, as well as workflow and tool recommendations.

For troubleshooting, look up the FAQ or write us a message at .